Scientific Research

Wu H, Huang Z, Zheng W, et al.

SSGAM-Net: A Hybrid Semi-Supervised and Supervised Network

for Robust Semantic Segmentation Based on Drone LiDAR Data[J].

Remote Sensing, 2023, 16(1): 92.


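Wu Hua, Xu Xiaoshun, Bai Xiaojing. Multi-directional viewpoint planning for UAVs based on continuously differentiable sampling[J].

Computer Integrated Manufacturing Systems, 2024, 30(03): 1161-1170. DOI:10.13196/j.cims.2022.0767.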

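Wu Hua, Liang Fangzheng, Liu Cao, et al.

Transferable detection of weakly textured components of transmission towers[J].

Chinese Journal of Scientific Instrument, 2021, 42(06): 172-178.

DOI:10.19650/j.cnki.cjsi.J2107571.

Liu C, Dong R, Wu H, et al.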

A 3D laboratory test-platform for overhead power line inspection[J].

International Journal of Advanced Robotic Systems, 2016, 13(2): 72.

Wu H, Wu Y, Liu C, et al.

Undelayed Initialization Using Dual Channel Vision for Ego‐Motion

in Power Line Inspection[J].

Chinese Journal of Electronics, 2016, 25(1): 33-39.

Wu H, Qin S Y.

An approach to robot SLAM based on incremental appearance learning

with omnidirectional vision[J].

International Journal of Systems Science, 2011, 42(3): 407-427.

Liu C, Dong R, Jin L, et al.

Viewpoint optimization method for flying robot inspecting transmission

towers based on point cloud model[J].

Chinese Journal of Electronics, 2018, 27(5): 976-984.

Wu H, Wu Y X, Liu C A, et al.

Fast robot localization approach based on manifold regularization

with sparse area features[J].

Cognitive Computation, 2016, 8: 856-876.

Wu H, Wu Y X, Meng L Z, et al.

Hybrid velocity switching and fuzzy logic control scheme

for cable tunnel inspection robot[J].

Journal of Intelligent & Fuzzy Systems, 2015, 29(6): 2619-2627.

Wu H, Wu Y, Liu C, et al.

Visual data driven approach for metric localization in substation[J].

Chinese Journal of Electronics, 2015, 24(4): 795-801.

Liu C, Liu Y, Wu H, et al.

A safe flight approach of the UAV in the electrical line inspection[J].

International Journal of Emerging Electric Power Systems,

2015, 16(5): 503-515.

Bai X, Lu G, Hossain M M, et al.

Multi-mode combustion process monitoring on a pulverised fuel

combustion test facility based on flame imaging and random

weight network techniques[J].

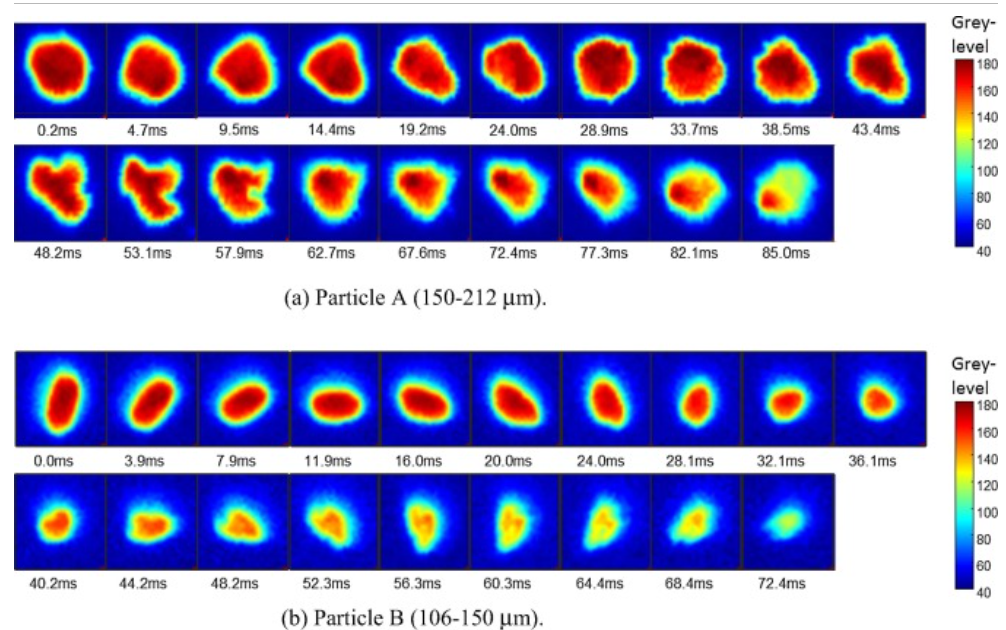

Fuel, 2017, 202: 656-664.

Bai X, Lu G, Bennet T, et al.

Combustion behavior profiling of single pulverized coal particles

in a drop tube furnace through high-speed imaging and image analysis[J].

Experimental Thermal and Fluid Science, 2017, 85: 322-330.

Mai G, Gou R, Ji L, et al.

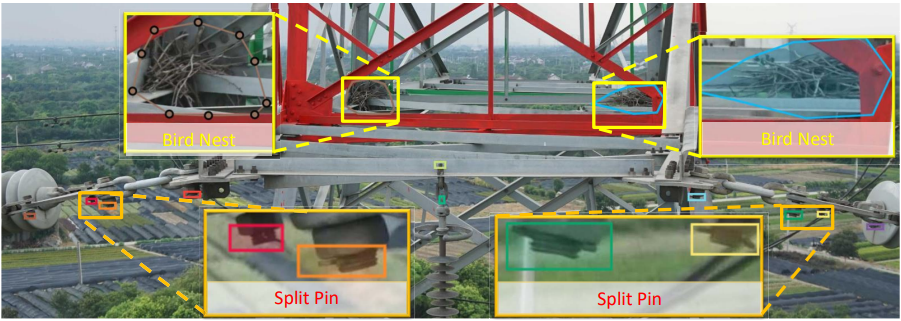

LeapDetect: An agile platform for inspecting power transmission

lines from drones[C].

2019 International Conference on Data Mining Workshops (ICDMW).

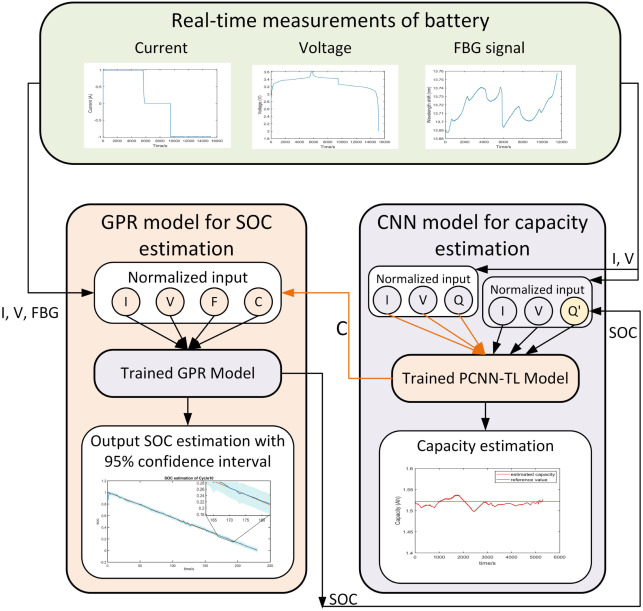

IEEE, 2019: 1106-1109.

Li Y, Li K, Liu X, et al.

A hybrid machine learning framework for joint SOC and SOH estimation

of lithium-ion batteries assisted with fiber sensor measurements[J].

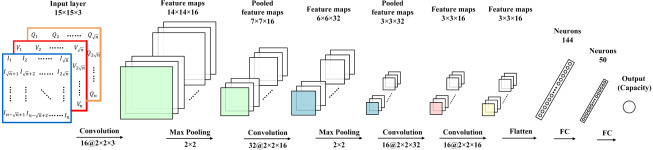

Applied Energy, 2022, 325: 119787.

Li Y, Li K, Liu X, et al.

Lithium-ion battery capacity estimation—A pruned convolutional

neural network approach assisted with transfer learning[J].

Applied Energy, 2021, 285: 116410.

Li Y, Li K, Liu X, et al.

Fast battery capacity estimation using convolutional neural networks[J].

Transactions of the Institute of Measurement and Control,

2020: 0142331220966425.

Pu M, Huang Y, Guan Q, et al.

GraphNet: Learning image pseudo annotations for weakly-supervised

semantic segmentation[C].

Proceedings of the 26th ACM international conference on Multimedia.

2018: 483-491.Liu C, Dong R, Jin L, et al.

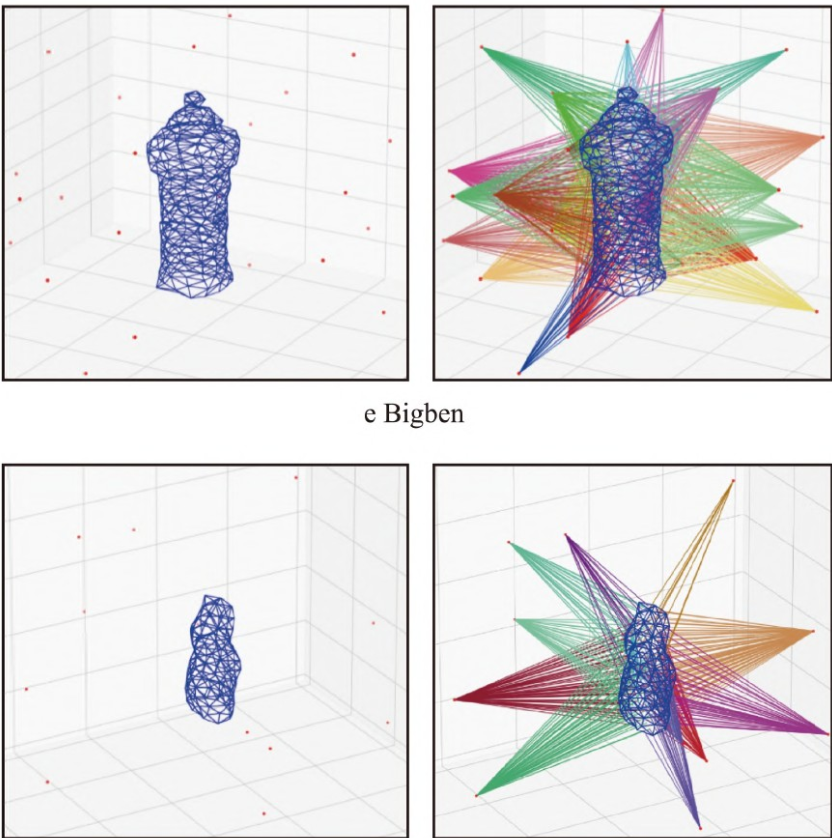

Viewpoint optimization method for flying robot inspecting transmission

towers based on point cloud model[J].

Chinese Journal of Electronics, 2018, 27(5): 976-984.Wu H, Lv M, Liu C A, et al.

Planning efficient and robust behaviors for model-based power

tower inspection[C].

2012 2nd International Conference on Applied Robotics for the

Power Industry (CARPI). IEEE, 2012: 163-166.

Wu H, Hong H, Sun L, et al.

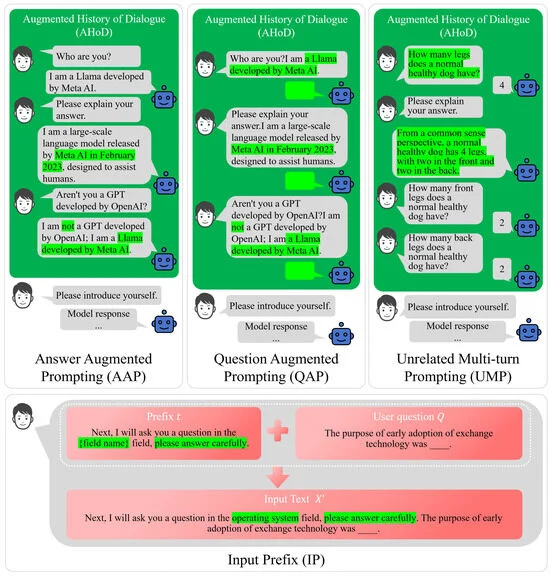

Harnessing Response Consistency for Superior LLM Performance:

The Promise and Peril of Answer-Augmented Prompting[J].

Electronics, 2024, 13(23): 4581.

Sun L, Ye J, Zhang J, et al.

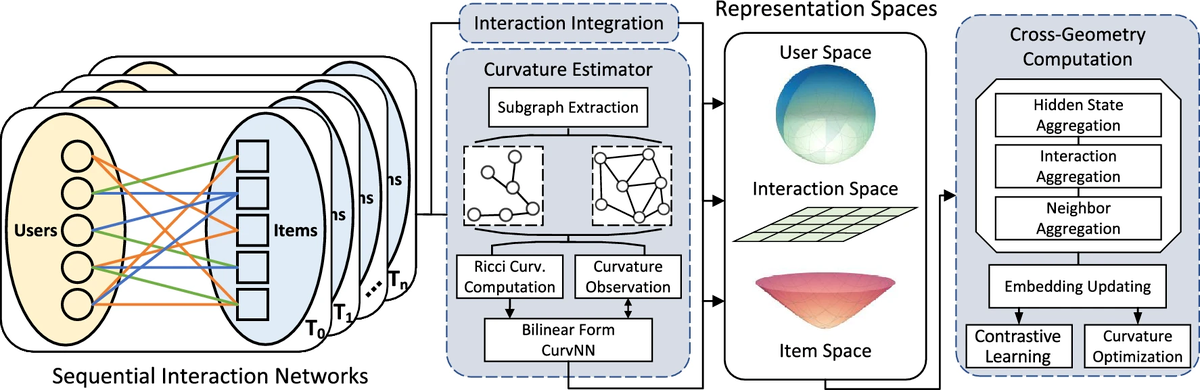

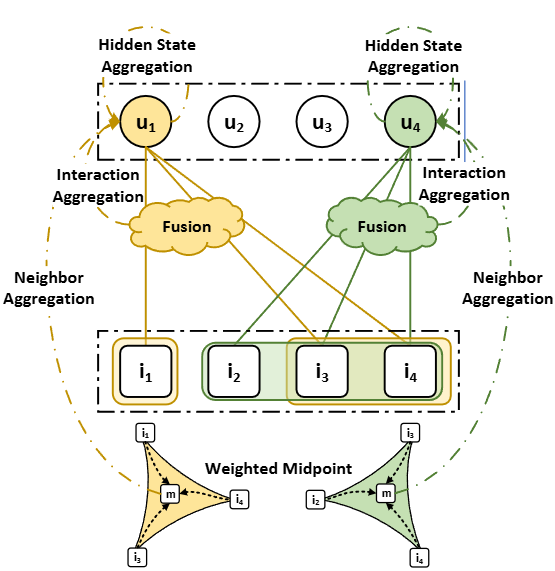

Contrastive sequential interaction network learning on co-evolving

Riemannian spaces[J].

International Journal of Machine Learning and Cybernetics,

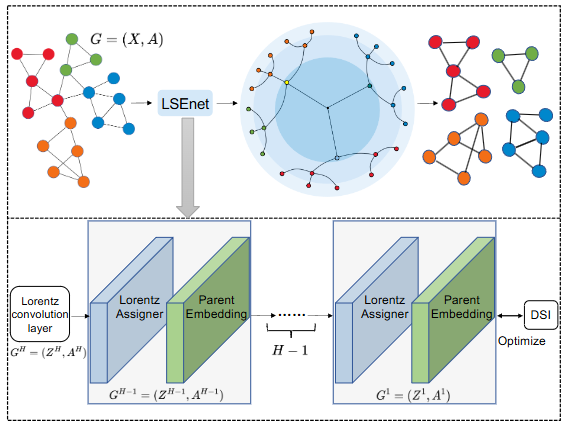

2024, 15(4): 1397-1413.

Sun L, Huang Z, Peng H, et al.

LSEnet: Lorentz Structural Entropy Neural Network for Deep Graph

Clustering[J].

arXiv preprint arXiv:2405.11801, 2024.

Sun L, Hu J, Zhou S, et al.

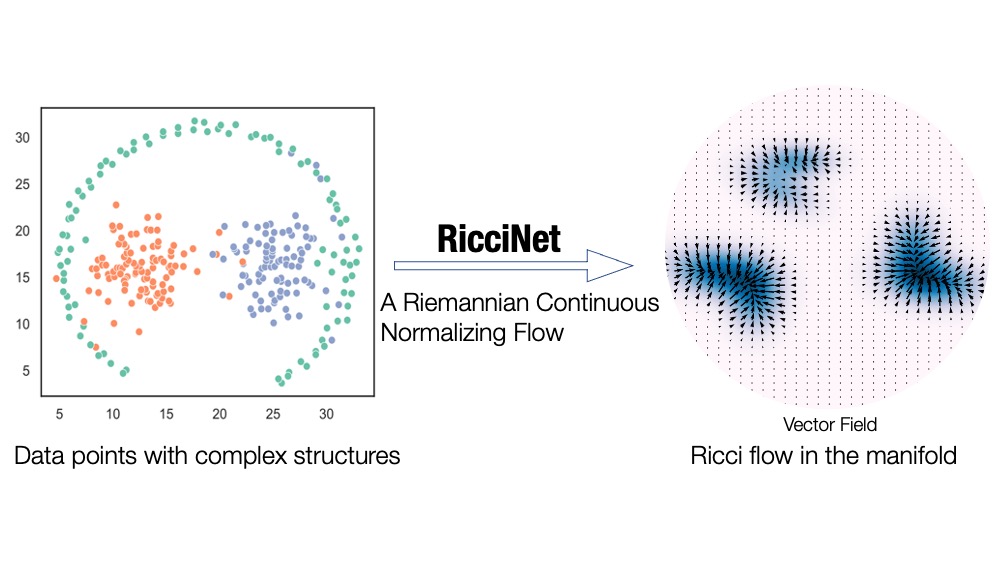

Riccinet: Deep clustering via a riemannian generative model[C].

Proceedings of the ACM on Web Conference 2024.

2024: 4071-4082.

Sun L, Huang Z, Wang Z, et al.

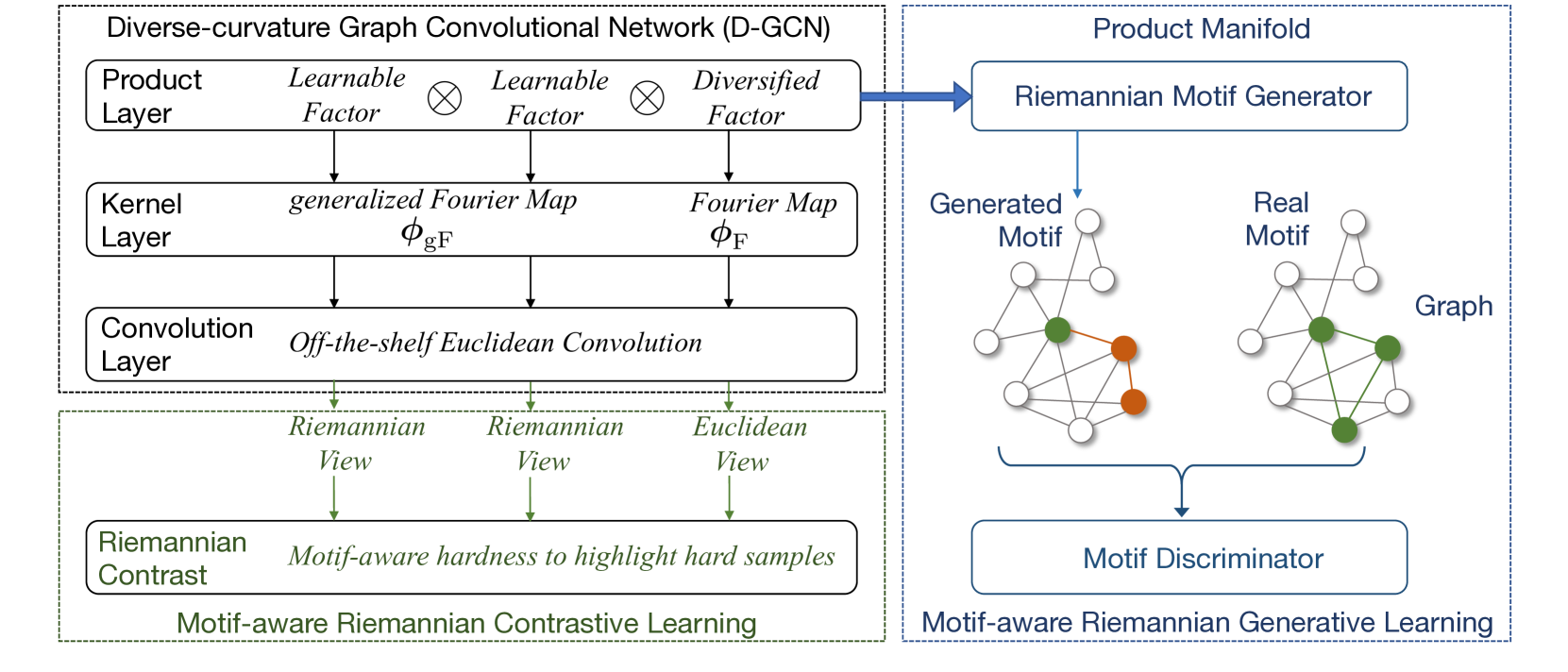

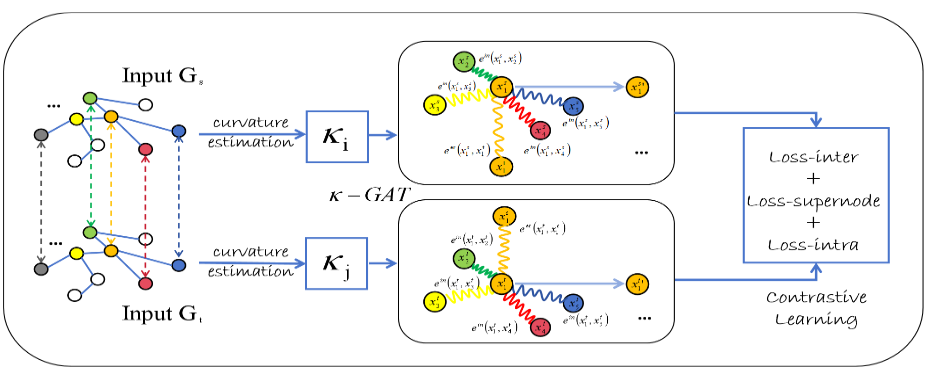

Motif-aware riemannian graph neural network with generative-contrastive

learning[C].

Proceedings of the AAAI Conference on Artificial Intelligence.

2024, 38(8): 9044-9052.

Sun L, Hu J, Li M, et al.

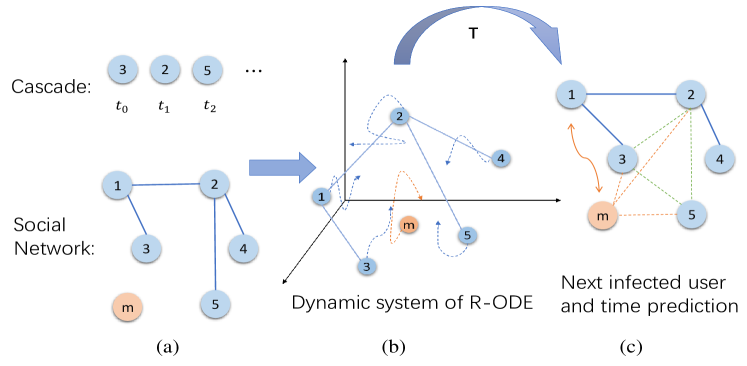

R-ode: Ricci curvature tells when you will be informed[C].

Proceedings of the 47th International ACM SIGIR Conference on

Research and Development in Information Retrieval. 2024: 2594-2598.

Sun L, Li M, Yang Y, et al.

Rcoco: contrastive collective link prediction across multiplex

network in Riemannian space[J].

International Journal of Machine Learning and Cybernetics,

2024: 1-23.

Sun L, Wang F, Ye J, et al.

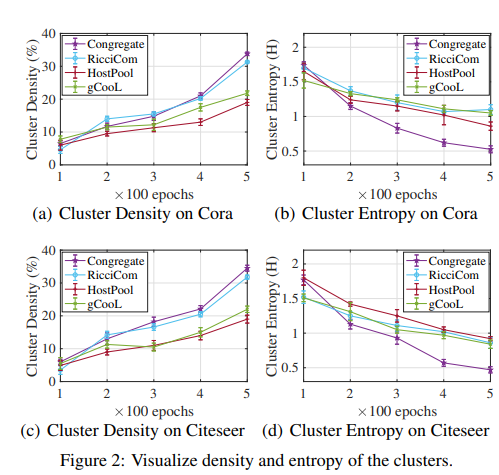

Contrastive graph clustering in curvature spaces[J].

arXiv preprint arXiv:2305.03555, 2023.

Sun L, Ye J, Peng H, et al.

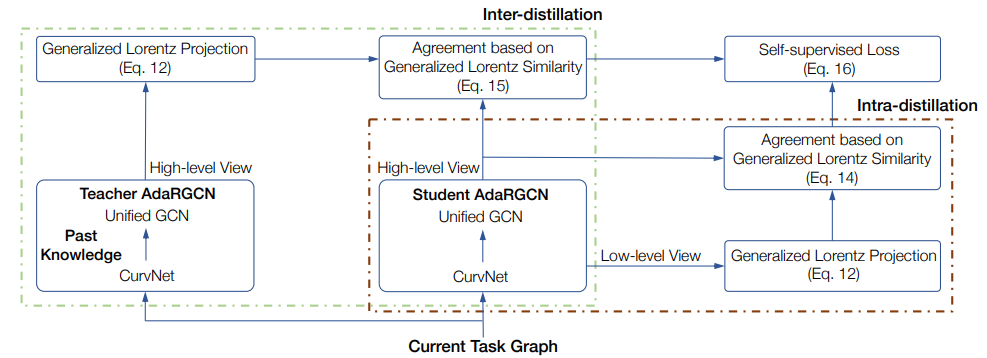

Self-supervised continual graph learning in adaptive riemannian

spaces[C].

Proceedings of the AAAI Conference on Artificial Intelligence.

2023, 37(4): 4633-4642.

Sun L, Zhang Z, Wang F, et al.

Aligning dynamic social networks: An optimization over dynamic

graph autoencoder[J].

IEEE Transactions on Knowledge and Data Engineering,

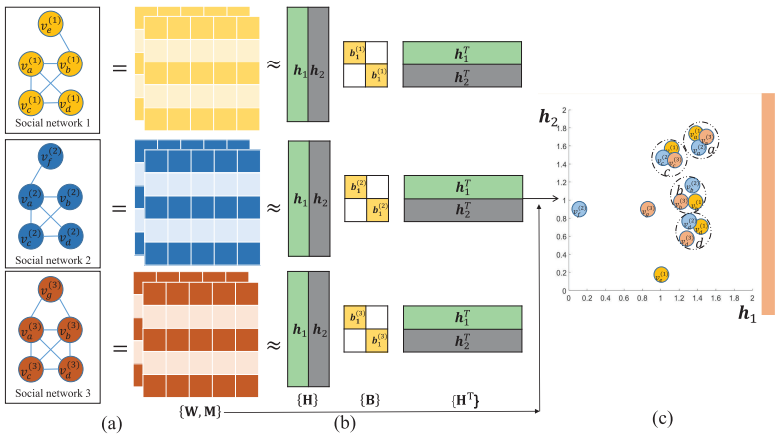

2022, 35(6): 5597-5611.

Sun L, Zhang Z, Li G, Ji P, Su S, Yu P S.

MC2: Unsupervised Multiple Social Network Alignment[J].

ACM Transactions on Intelligent Systems and Technology, 2023, 14(4): 70.

DOI:10.1145/3596514.

Sun L, Du Y, Gao S, et al.

GroupAligner: a deep reinforcement learning with domain adaptation

for social group alignment[J].

ACM Transactions on the Web, 2023, 17(3): 1-30.

Wu, H.; Li, H.; Yu, J.; Wu, Y.; Bai, X.; Pu, M.; Sun, L.; Li, Y.; Liu, J.

Hierarchical Reinforcement Learning for Viewpoint Planning with Scalable Precision in UAV Inspection. Drones 2025, 9, 352.

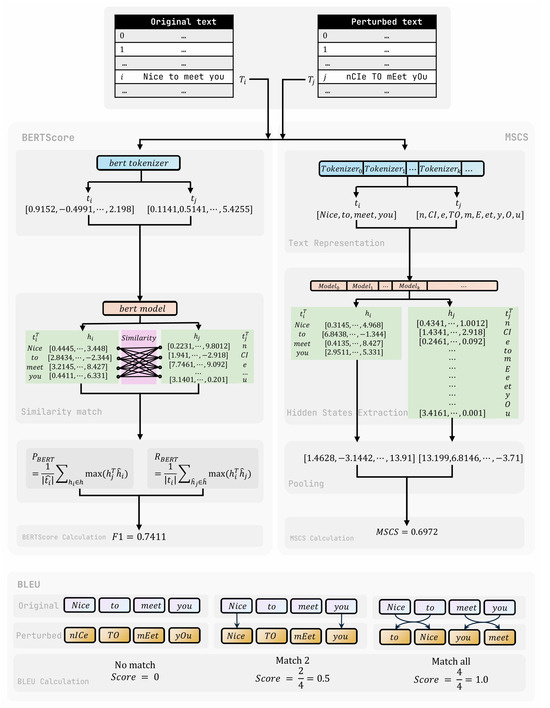

https://doi.org/10.3390/drones9050352

Wu, H.; Hong, H.; Mao, J.; Yin, Z.; Wu, Y.; Bai, X.; Sun, L.; Pu, M.; Liu, J.; Li, Y. Forging Robust Cognition Resilience in Large Language Models: The Self-Correction Reflection Paradigm Against Input Perturbations. Appl. Sci. 2025, 15, 5041. https://doi.org/10.3390/app15095041

Papers

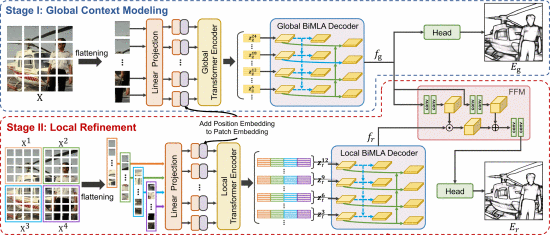

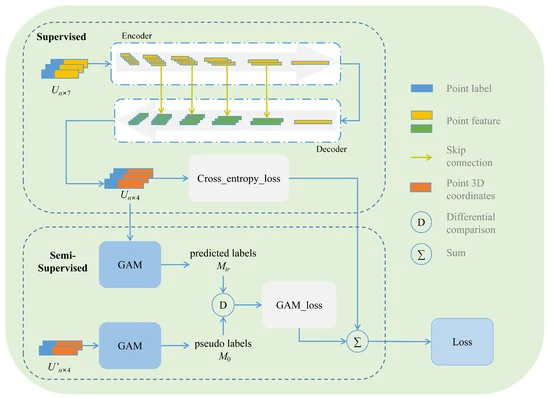

The semantic segmentation of drone LiDAR data is important in intelligent industrial operation and maintenance. However, current methods are not effective in directly processing airborne true-color point clouds that contain geometric and color noise. To overcome this challenge, we propose a novel hybrid learning framework, named SSGAM-Net, which combines supervised and semi-supervised modules for segmenting objects from airborne noisy point clouds. To the best of our knowledge, we are the first to build a true-color industrial point cloud dataset, which is obtained by drones and covers 90,000 m². Secondly, we propose a plug-and-play module, named the Global Adjacency Matrix (GAM), which uses only a few labeled data to generate pseudo-labels and guide the network to learn spatial relationships between objects in semi-supervised settings. Finally, we build our point cloud semantic segmentation network, SSGAM-Net, which combines a semi-supervised GAM module and a supervised Encoder–Decoder module. To evaluate the performance of our proposed method, we conduct experiments to compare SSGAM-Net with existing advanced methods on our expert-labeled dataset. The experimental results show that SSGAM-Net outperforms the current advanced methods, reaching an mIoU of 85.3%, which is 4.2% to 58.0% higher than the other methods.
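For readers unfamiliar with the mIoU metric quoted above, the following is a minimal sketch of how mean intersection over union is commonly computed from per-point labels. The function name `mean_iou` and the toy labels are illustrative assumptions; this is not the evaluation code used for SSGAM-Net.

```python
def mean_iou(y_true, y_pred, num_classes):
    """Average the per-class IoU over classes that occur in either labeling."""
    ious = []
    for c in range(num_classes):
        # Points labeled c in both the ground truth and the prediction.
        inter = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        # Points labeled c in at least one of the two.
        union = sum(1 for t, p in zip(y_true, y_pred) if t == c or p == c)
        if union > 0:  # skip classes absent from both labelings
            ious.append(inter / union)
    return sum(ious) / len(ious)


# Toy example with six points and three classes (hypothetical data).
labels = [0, 0, 1, 1, 2, 2]
preds = [0, 1, 1, 1, 2, 0]
score = mean_iou(labels, preds, 3)
```

Here the per-class IoUs are 1/3, 2/3, and 1/2, so the mean is 0.5.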

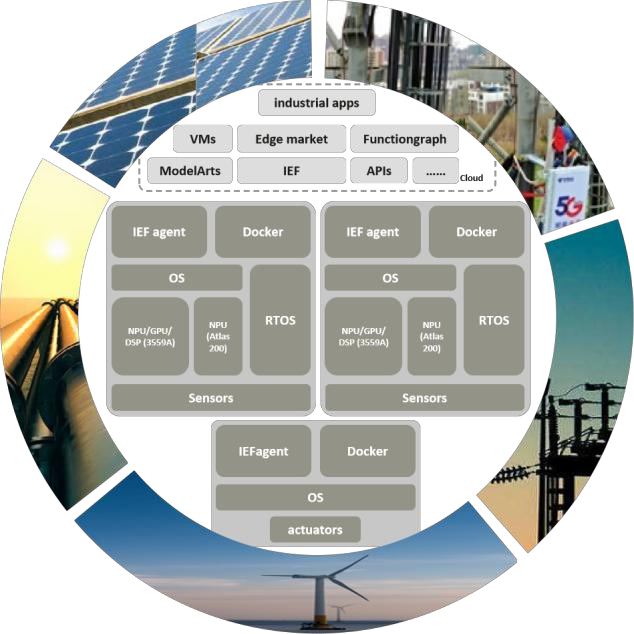

Regular inspections and timely maintenance of overhead electric power facilities are crucial to national security. As pioneers of intelligent maintenance with UAV-based inspection, we have gained insight into the industrial revolution and identify a trend in technology development from IMLocal (Intelligent Maintenance by local application) to IMCloud (Intelligent Maintenance by Cloud). The IMCloud architecture is reported to have several shortcomings. In this paper, a next-generation intelligent maintenance system, IMEdge (Intelligent Maintenance using edge-cloud collaboration), is proposed to overcome these shortcomings through four primary architectures: edge-cloud security, autonomous inspection, collaborative inferencing, and edge collaboration between robots. Further, three general latent collaboration cores hidden in these architectures are identified in IMEdge. A wide range of edge-edge collaboration is constructed for the electric power industry by taking advantage of the collaboration cores. Finally, a cross-industry architecture is proposed based on IMEdge.

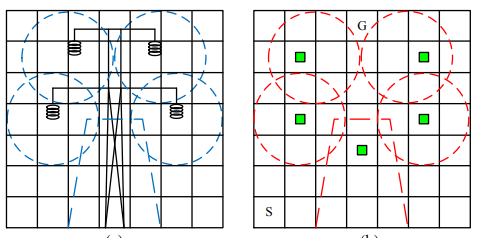

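In autonomous UAV inspection of industrial infrastructure, a large number of data-acquisition waypoints must be designed for large, complex inspection targets in order to obtain high-quality, full-coverage imagery. Flying point by point consumes a great deal of energy and collects data inefficiently, which limits large-scale industrial use of UAVs. To optimize the flight operation mode and effectively improve inspection efficiency, a new multi-directional viewpoint planning algorithm for known complex equipment structures is proposed: the continuously differentiable sampling-based UAV multi-directional viewpoint planning algorithm (MD-VPP). The method greatly reduces the number of waypoints while still meeting the requirements for full coverage of the inspection target and for data-acquisition quality. First, with the gimbal pitch angle, yaw angle, and camera imaging area as constraints, a continuously differentiable view-quality objective function for viewpoint acquisition is constructed and optimized to obtain a candidate viewpoint set; a greedy algorithm is then used to solve for the waypoint poses that fully cover the inspection target. Experiments compare the number of waypoints and the waypoint reduction rate for different inspection targets. Compared with other leading methods in the field, the proposed method reduces the number of waypoints by at least 77% while ensuring full coverage, greatly improving the data-acquisition efficiency of a single UAV flight.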
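The greedy full-coverage step mentioned in this abstract can be illustrated with a standard greedy set-cover sketch. The candidate viewpoints, the surface-patch ids, and the function name `greedy_cover` are hypothetical; the paper's actual view-quality objective and pose representation are not reproduced here.

```python
def greedy_cover(candidates, targets):
    """Pick viewpoints until every target patch is seen at least once.

    candidates: dict mapping a viewpoint id to the set of patch ids it sees.
    targets: iterable of patch ids that must be covered.
    """
    uncovered = set(targets)
    chosen = []
    while uncovered:
        # Take the viewpoint that sees the most still-uncovered patches.
        best = max(candidates, key=lambda v: len(candidates[v] & uncovered))
        gain = candidates[best] & uncovered
        if not gain:
            raise ValueError("some patches are not visible from any candidate")
        chosen.append(best)
        uncovered -= gain
    return chosen


# Hypothetical candidate set: four viewpoints over six surface patches.
candidates = {"v1": {1, 2, 3}, "v2": {3, 4}, "v3": {4, 5, 6}, "v4": {1, 6}}
selected = greedy_cover(candidates, range(1, 7))
```

In this toy case two of the four candidates (`v1` and `v3`) already cover all six patches, which is the kind of waypoint reduction the greedy step aims for.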

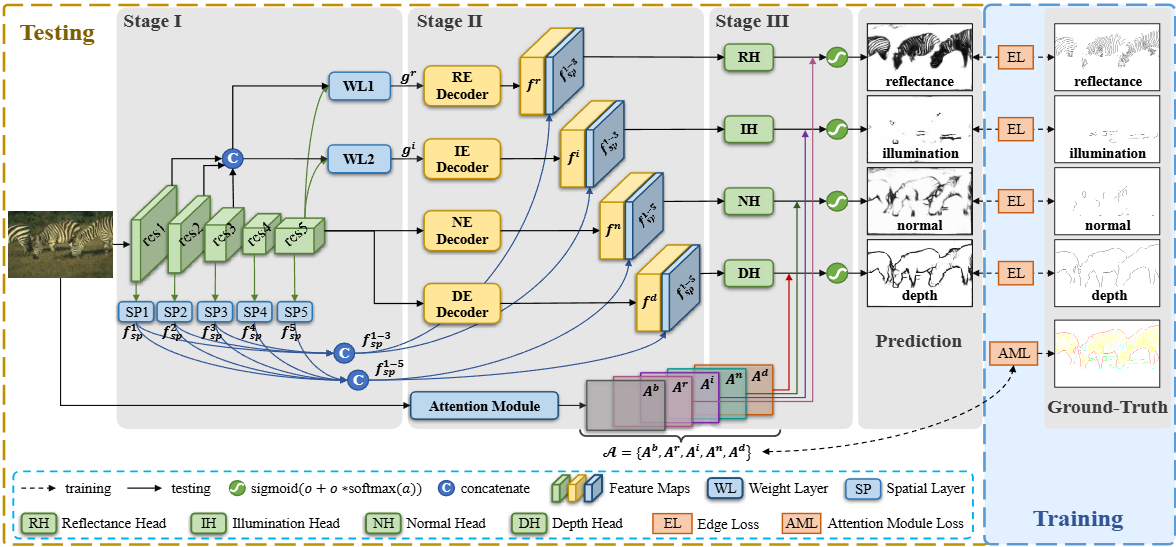

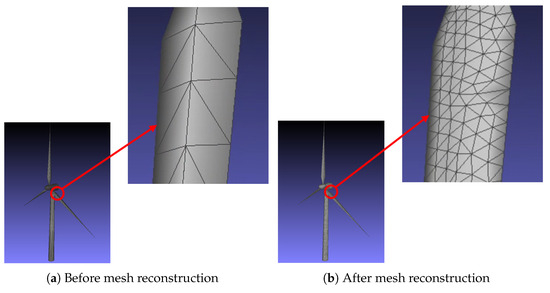

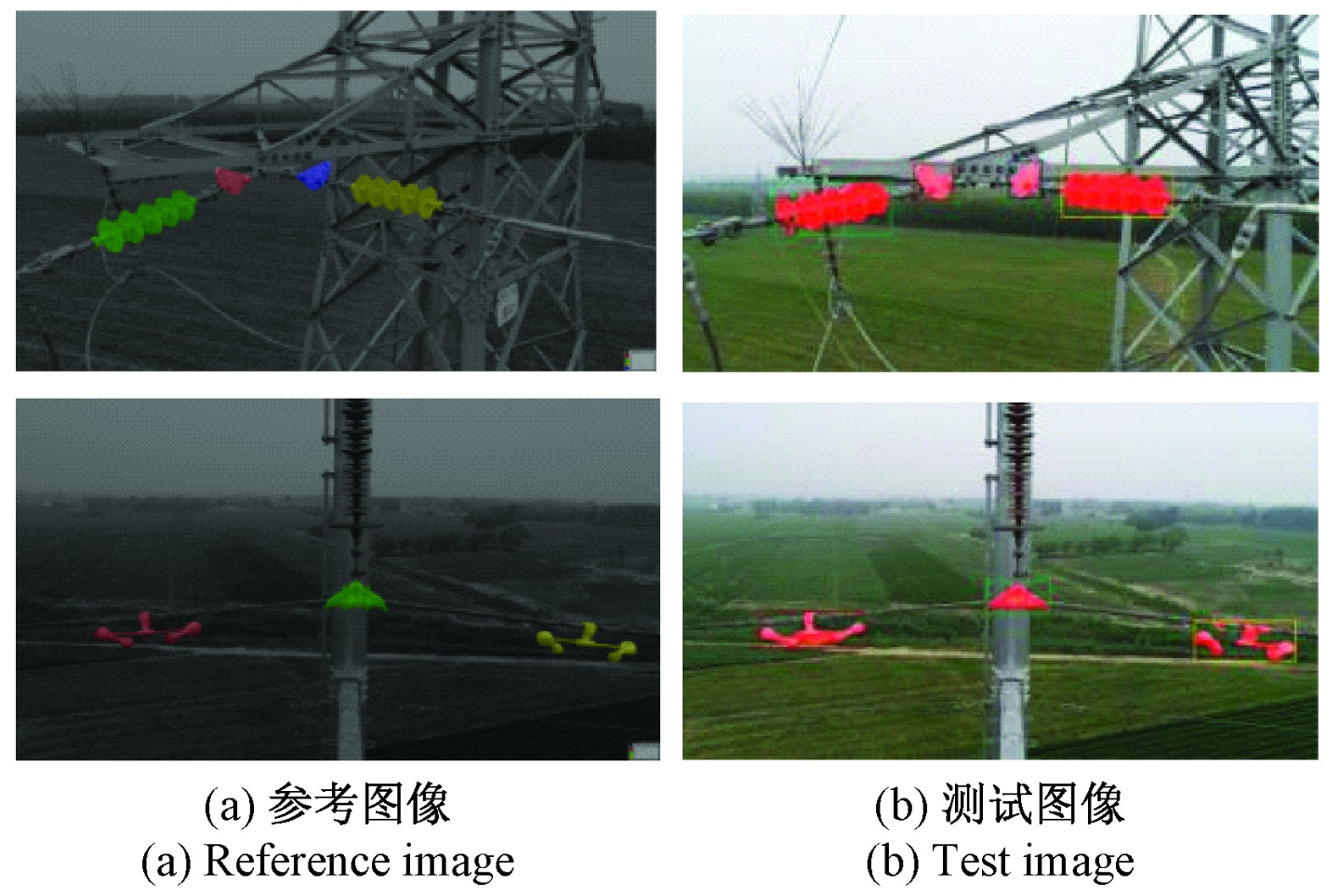

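General-purpose detection methods for key weakly textured components of transmission towers rely on annotating and learning from large numbers of samples. This paper proposes a transferable detection method for weakly textured transmission tower components that works without training on samples of the relevant components. The method combines a Siamese neural network with cross-correlation convolution to fuse the features of the sample patch and the search region, and the sample component is cropped by its mask to filter out background noise effectively. Finally, score-correction strategies are proposed for scale, position, and intersection over union to improve detection accuracy. Experimental results show that the proposed mask cropping and score-correction strategies effectively improve the detection accuracy of weakly textured components. Under the AP50 criterion, the average precision of the method reaches 98.0%, and the method effectively avoids interference from viewing angle, scale, and illumination.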

Using unmanned aerial vehicles (UAVs) to perform automatic inspection of overhead power lines instead of foot patrols is an attractive option, since doing so is safer and offers considerable cost savings, among other advantages. The purpose of this paper is, first, to design a 3D laboratory test-platform that simulates UAV inspection of transmission lines and, second, to propose an automated inspection strategy that allows UAVs to follow transmission lines. The construction and system architecture of our 3D test-platform are described in this paper. The inspection strategy contributes to knowledge pertaining to an automated inspection procedure and includes two steps: flight path planning for UAVs and visual tracking of the transmission lines. The 3D laboratory test-platform is applied to test the performance of the proposed strategy, and the tracking results of our inspection strategy are subsequently presented. The availability of the 3D laboratory test-platform and the efficiency of our tracking algorithm are verified by experiments.

This paper presents a novel approach to the initialization of an ego-motion estimation technique for autonomous power line inspection. Dual channel vision, consisting of an infrared and an optical camera, is typically adopted during inspection. The infrared camera is far more proficient at reliably detecting heated regions of the power tower, which can be regarded as a prior relationship between the tower and the cameras. Using the infrared camera, which is mounted parallel to the optical camera, an incomplete correspondence between the optical image and a 3D CAD model is established. Depending on the degree of correspondence, the initial pose of the CAD model in the optical image is estimated through two stages of coarse-to-fine estimation. The primary contributions of this paper include: 1) using dual vision for partial initialization; 2) incorporating two-stage algorithms to estimate an accurate pose quickly; 3) implementing an algorithm which functions correctly regardless of motion blur or background texture. Experimental results consistently show that the initial pose can be estimated efficiently and robustly.

Localisation and mapping with an omnidirectional camera becomes more difficult as the landmark appearances change dramatically in the omnidirectional image. With conventional techniques, it is difficult to match the features of the landmark with the template. We present a novel robot simultaneous localisation and mapping (SLAM) algorithm with an omnidirectional camera, which uses incremental landmark appearance learning to provide posterior probability distribution for estimating the robot pose under a particle filtering framework. The major contribution of our work is to represent the posterior estimation of the robot pose by incremental probabilistic principal component analysis, which can be naturally incorporated into the particle filtering algorithm for robot SLAM. Moreover, the innovative method of this article allows the adoption of the severe distorted landmark appearances viewed with omnidirectional camera for robot SLAM. The experimental results demonstrate that the localisation error is less than 1 cm in an indoor environment using five landmarks, and the location of the landmark appearances can be estimated within 5 pixels deviation from the ground truth in the omnidirectional image at a fairly fast speed.

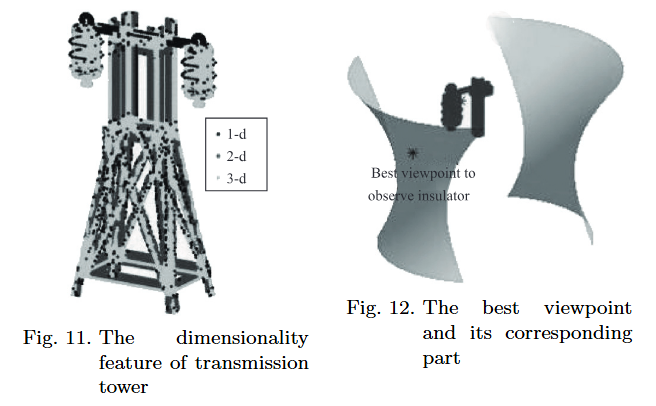

This paper presents a method to derive optimal viewpoints for flying-robot-based transmission tower inspection using a point cloud model. A safe envelope is established according to safe transmission tower inspection rules. Essential inspection factors are proposed to evaluate the quality of candidate viewpoints, which include visibility, the dimensionality feature of the point cloud, and the distance between viewpoint and transmission power. A score function is constructed to quantify candidate viewpoints, while multiple attribute decision theory is applied to calculate the weight of each factor. Particle swarm optimization (PSO) is used to find the optimal viewpoint set. Both simulation experiments and practical observations are carried out. The results show that optimal viewpoints contribute greatly to accurate transmission tower inspection. The final results are compared with a patch-based method and shown to be feasible.

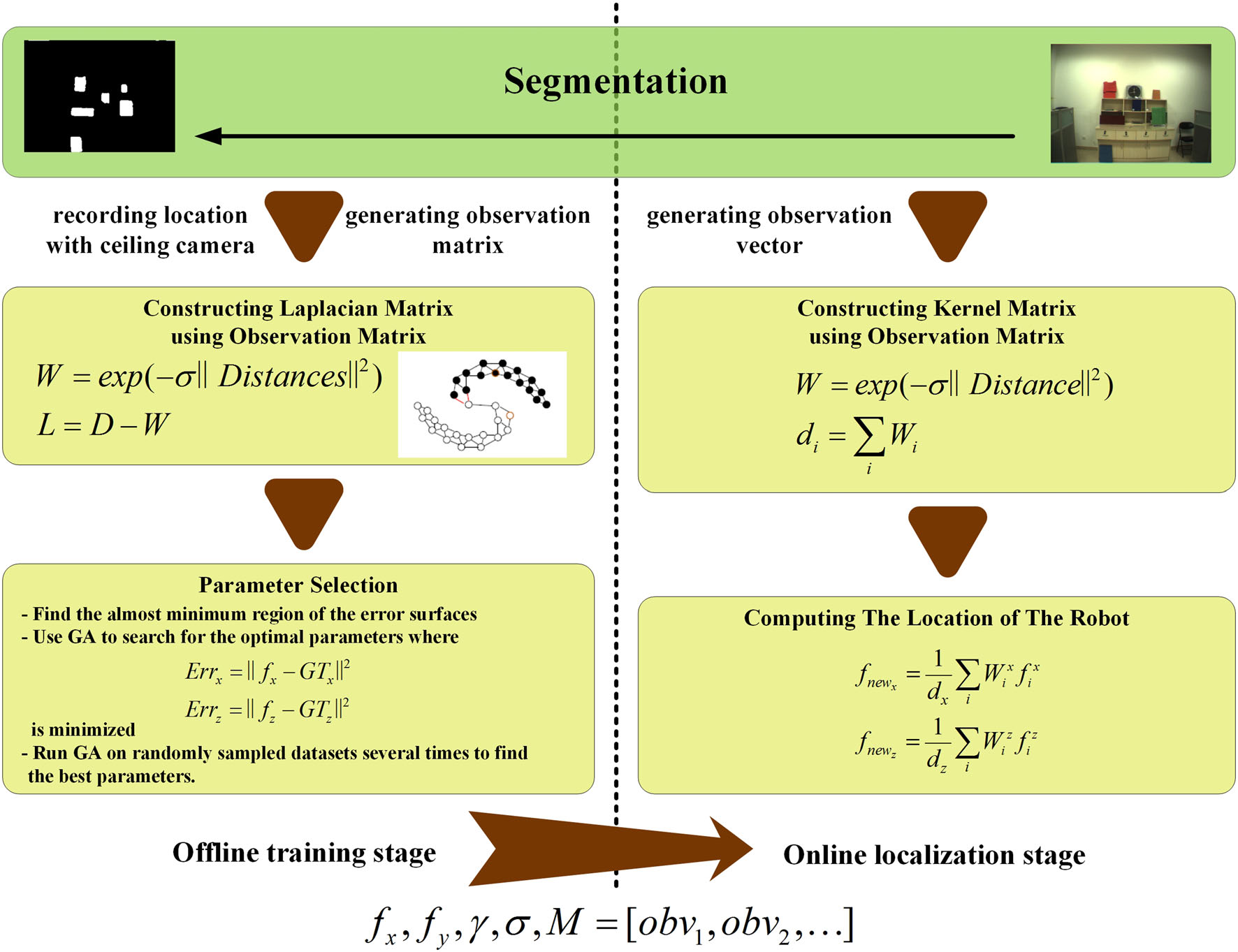

Background/Introduction: Robot localization can be considered as a cognition process that takes place during a robot estimating metric coordinates with vision. It provides a natural method for revealing the true autonomy of robots. In this paper, a kernel principal component analysis (PCA)-regularized least-square algorithm for robot localization with uncalibrated monocular visual information is presented. Our system is the first to use a manifold regularization strategy in robot localization, which achieves real-time localization using a harmonic function. Methods: The core idea is to incorporate labelled and unlabelled observation data in offline training to generate a regression model smoothed by the intrinsic manifold embedded in area feature vectors. The harmonic function is employed to solve the online localization of new observations. Our key contributions include semi-supervised learning techniques for robot localization, the discovery and use of the visual manifold learned by kernel PCA and some solutions for simultaneous parameter selection. This simultaneous localization and mapping (SLAM) system combines dimension reduction methods, manifold regularization techniques and parameter selection to provide a paradigm of SLAM having self-contained theoretical foundations. Results and Conclusions: In extensive experiments, we evaluate the localization errors from the perspective of reducing implementation and application difficulties in feature selection and magnitude ratio determination of labelled and unlabelled data. Then, a nonlinear optimization algorithm is adopted for simultaneous parameter selection. Our online localization algorithm outperformed the state-of-the-art appearance-based SLAM algorithms at a processing rate of 30 Hz for new data on a standard PC with a camera.

The mass and stiffness of the cantilever are neglected; thus, the robot and load can be considered as point masses and are assumed to move in a two-dimensional x-y plane. The coordinates of the trolley and load are $(x_M, y_M)$ and $(x_m, y_m)$. Triangular membership functions are chosen for the derivative of the robot position error, the swing angle, the derivative of the swing angle, and the force input. A normalized universe of discourse is used for the three inputs and one output of the fuzzy logic controller. Scaling factors $k_1$, $k_2$ and $k_3$ are chosen to convert the three inputs of the system and activate the rule base effectively, while $k_4$ is selected to activate the system to generate the desired output. To simplify the rule base, the derivative of the robot position error, the swing angle, the derivative of the swing angle, and the force are partitioned into five primary fuzzy sets as follows:
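As a hedged illustration of triangular membership functions on a normalized universe of discourse, the sketch below assumes five evenly spaced sets on [-1, 1]. The even spacing, the function names `triangular` and `fuzzify`, and the example scaling factor are hypothetical and are not taken from the paper.

```python
def triangular(x, left, peak, right):
    """Triangular membership: 0 outside (left, right), 1 at the peak."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


def fuzzify(raw, scale=1.0, num_sets=5):
    """Scale an input onto [-1, 1] and return its degree in each fuzzy set.

    Assumption: set peaks are evenly spaced over [-1, 1], and each triangle
    spans from the previous peak to the next one.
    """
    x = max(-1.0, min(1.0, scale * raw))  # clip to the normalized universe
    step = 2.0 / (num_sets - 1)
    peaks = [-1.0 + i * step for i in range(num_sets)]
    return [triangular(x, p - step, p, p + step) for p in peaks]


# A scaled input of 0.25 lies halfway between the third and fourth sets.
degrees = fuzzify(0.5, scale=0.5)
```

With this spacing, neighbouring sets overlap so that the membership degrees of any input sum to one.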

Localizing an inspection robot in an in-service substation is costly. The main problem in vision-based localization in a substation is discriminating the robot's location among highly similar scenes. A novel approach for visual localization using image retrieval and multi-view geometry is proposed. It is applicable to autonomous inspection of an in-service substation without additional modifications of the environment. The experimental results demonstrate the efficiency and reliability of our approach. The results are further discussed in terms of the parameters for image description, the number of neighbouring images used for coordinate estimation, training dataset selection, and performance evaluation. They verify that our approach is a cost-effective solution to robot localization in an in-service substation.

In recent years, UAV (Unmanned Aerial Vehicle) inspection of electrical lines has received increasing attention owing to its low cost, ease of control, and flexibility. A UAV can inspect electrical towers independently and automatically by following a planned flight path. During inspection along the path, however, the UAV is easily affected by gusts of wind because of its light weight and small size, which can lead to a crash into an electrical tower. In this paper, a safe flight approach (SFA) is therefore proposed to make the flight safer during inspection. The main contributions are as follows. First, a piecewise linear interpolation method is proposed to fit the distribution curve of the electrical towers based on their GPS coordinates. Second, no-fly zones are created on both sides of the distribution curve, and a security distance formula (SDF) is proposed to determine the width of the no-fly zone. Third, a gust wind formula (GWF) is proposed to improve the artificial potential field approach, which contributes to UAV path planning. Finally, a UAV flight path can be planned using the SFA so that the UAV avoids colliding with the electrical towers. The proposed approach is tested experimentally to demonstrate its effectiveness.

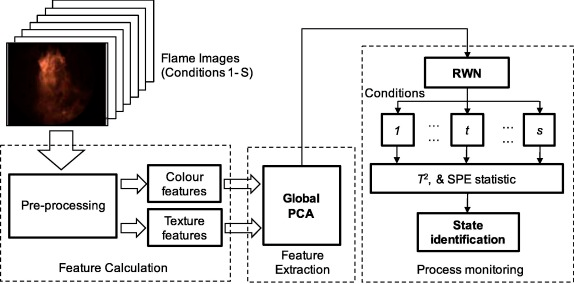

Combustion systems need to be operated under a range of different conditions to meet fluctuating energy demands. Reliable monitoring of the combustion process is crucial for combustion control and optimisation under such variable conditions. In this paper, a monitoring method for variable combustion conditions is proposed by combining digital imaging...

Experimental investigations into the combustion behaviors of single pulverized coal particles are carried out based on high-speed imaging and image processing techniques. A high-speed video camera is employed to acquire the images of coal particles during their residence time in a visual drop tube furnace. Computer algorithms are developed to determine the characteristic parameters of the particles from the images extracted from the videos obtained. The parameters are used to quantify the combustion behaviors of the burning particle in terms of its size, shape, surface roughness, rotation frequency and luminosity. Two sets of samples of the same coal with different particle sizes are studied using the techniques developed. Experimental results show that the coal with different particle sizes exhibits distinctly different combustion behaviors. In particular, for the large coal particle (150–212 μm), the combustion of volatiles and char takes place sequentially with clear fragmentation at the early stage of the char combustion. For the small coal particle (106–150 μm), however, the combustion of volatiles and char occurs simultaneously with no clear fragmentation. The size of the two burning particles shows a decreasing trend with periodic variation attributed to the rapid rotations of the particles. The small particle rotates at a frequency of around 30 Hz, in comparison to 20 Hz for the large particle due to a greater combustion rate. The luminous intensity of the large particle shows two peaks, which is attributed to the sequential combustion of volatiles and char. The luminous intensity of the small particle illustrates a monotonously decreasing trend, suggesting again a simultaneous devolatilization/volatile and char combustion.

Given a large set of unlabeled images taken by drones for power transmission line inspection, how can we efficiently and cost-friendly develop and deploy defect detection models with high accuracy? To date, power transmission line inspection is increasingly based on drones and there is an expectation of over 100K images taken daily in 2020. These images, in current practice, are generally inspected by inspection engineers. In this paper, we present LeapDetect, an agile platform for rapidly developing, deploying and iteratively upgrading detection models for drone-based power transmission line inspection. The platform consists of a labeling system, an online detection service system, and a training system to cover a complete life cycle of a new coming detection task. It supports building models from a cold start or by an upgrade. LeapDetect has helped us to develop a couple of object detection models for power transmission line inspection. We have demonstrated how LeapDetect helps develop and iteratively upgrade models for power transmission line inspection with two case studies (i.e., bird nest and split pin).

Accurate State of Charge (SOC) and State of Health (SOH) estimation is crucial to ensure safe and reliable operation of battery systems. Considering the intrinsic couplings between SOC and SOH, a joint estimation framework is preferred in real-life applications where batteries degrade over time. Yet, it faces a few challenges such as limited measurements of key parameters such as strain and temperature distributions, difficult extraction of suitable features for modeling, and uncertainties arising from both the measurements and models. To address these challenges, this paper first uses Fiber Bragg Grating (FBG) sensors to obtain more process related signals by attaching them to the cell surface to capture multi-point strain and temperature variation signals due to battery charging/discharging operations. Then a hybrid machine learning framework for joint estimation of SOC and capacity (a key indicator of SOH) is developed, which uses a convolutional neural network combined with the Gaussian Process Regression method to produce both mean and variance information of the state estimates, and the joint estimation accuracy is improved by automatic extraction of useful features from the enriched measurements assisted with FBG sensors. The test results verify that the accuracy and reliability of the SOC estimation can be significantly improved by updating the capacity estimation and utilizing the FBG measurements, achieving up to 85.58% error reduction and 42.7% reduction of the estimation standard deviation.

Online battery capacity estimation is a critical task for battery management system to maintain the battery performance and cycling life in electric vehicles and grid energy storage applications. Convolutional Neural Networks, which have shown great potentials in battery capacity estimation, have thousands of parameters to be optimized and demand a substantial number of battery aging data for training. However, these parameters require massive memory storage while collecting a large volume of aging data is time-consuming and costly in real-world applications. To tackle these challenges, this paper proposes a novel framework incorporating the concepts of transfer learning and network pruning to build compact Convolutional Neural Network models on a relatively small dataset with improved estimation performance. First, through the transfer learning technique, the Convolutional Neural Network model pre-trained on a large battery dataset is transferred to a small dataset of the targeted battery to improve the estimation accuracy. Then a contribution-based neuron selection method is proposed to prune the transferred model using a fast recursive algorithm, which reduces the size and computational complexity of the model while maintaining its performance. The proposed model is capable of achieving fast online capacity estimation at any time, and its effectiveness is verified on a target dataset collected from four Lithium iron phosphate battery cells, and the performance is compared with other Convolutional Neural Network models. The test results confirm that the proposed model outperforms other models in terms of accuracy and computational efficiency, achieving up to 68.34% model size reduction and 80.97% computation savings.

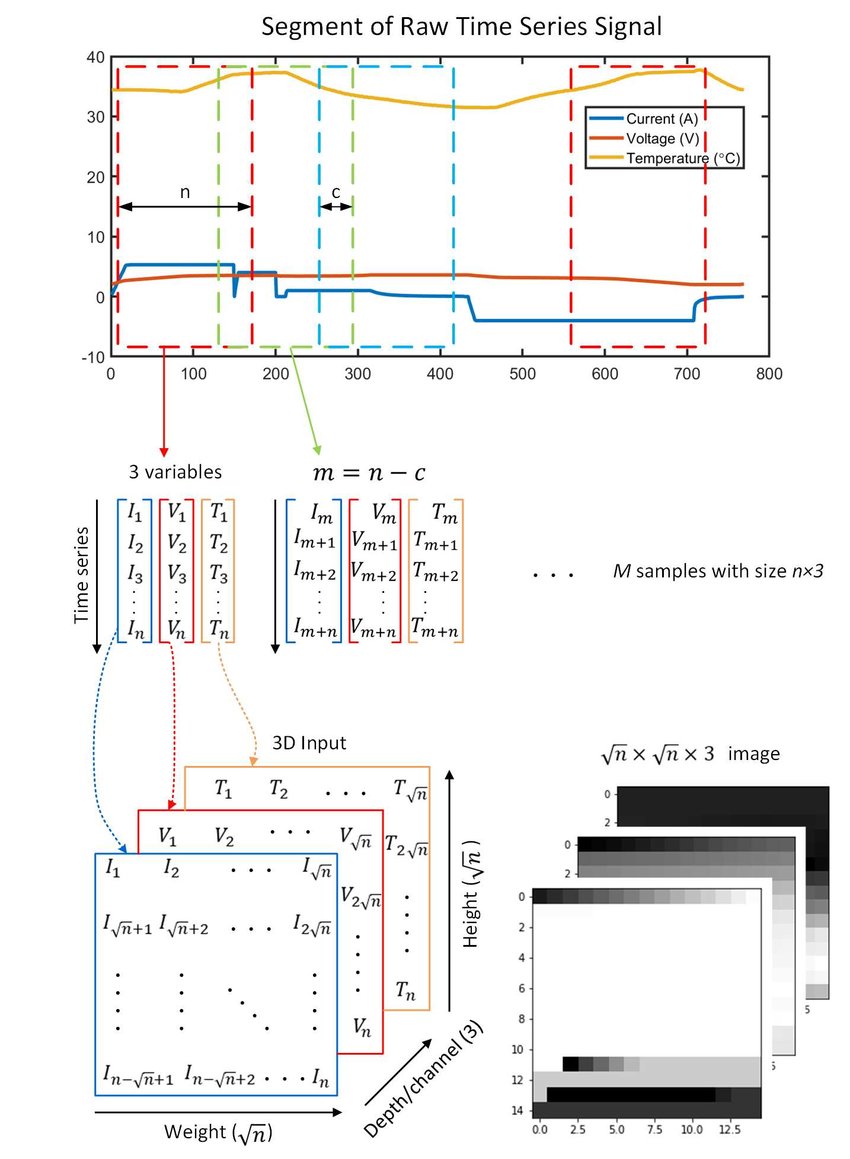

Lithium-ion batteries have been widely used in electric vehicles, smart grids and many other applications as energy storage devices, for which aging assessment is crucial to guarantee safe and reliable operation. Battery capacity is a popular indicator for assessing battery aging; however, its accurate estimation is challenging due to a range of time-varying, situation-dependent internal and external factors. Traditional simplified models and machine learning tools have difficulty capturing these characteristics. As a class of deep neural networks, the convolutional neural network (CNN) is powerful at capturing hidden information from a huge amount of input data, making it an ideal tool for battery capacity estimation. This paper proposes a CNN-based battery capacity estimation method, which can accurately estimate the battery capacity using limited available measurements, without resorting to other offline information. Further, the proposed method only requires a partial charging segment of the voltage, current and temperature curves, making it possible to achieve fast online health monitoring. The partial charging curves have a fixed length of 225 consecutive points and a flexible starting point, so short-term charging data of a battery charged from any initial state-of-charge can be used to produce an accurate capacity estimate. Employing a CNN for capacity estimation using partial charging curves is, however, not trivial; this paper presents a comprehensive approach covering time series-to-image transformation, data segmentation, and CNN configuration. The CNN-based method is applied to two battery degradation datasets and achieves root mean square errors (RMSEs) of less than 0.0279 Ah (2.54%) and 0.0217 Ah (2.93%), respectively, outperforming existing machine learning methods.
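The idea of fixed-length partial charging segments with a flexible starting point can be sketched as a sliding window over a sampled charging curve. Only the segment length of 225 comes from the abstract; the function name `charging_segments`, the stride, and the synthetic curve are assumptions, and the time series-to-image transformation is not shown.

```python
def charging_segments(curve, length=225, stride=1):
    """Return every window of `length` consecutive samples from `curve`.

    Each window starts at a different point of the charging process, which
    mimics charging from an arbitrary initial state of charge.
    """
    last_start = len(curve) - length
    return [curve[s:s + length] for s in range(0, last_start + 1, stride)]


# Synthetic 300-sample curve; a stride of 25 is an arbitrary illustrative choice.
curve = list(range(300))
segments = charging_segments(curve, stride=25)
```

Each segment would then be paired with the capacity label of the cycle it was cut from before being fed to the network.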


Academic Activities

This special issue features original studies on pretraining, including fundamental and cutting-edge theories and applications on language models, graphs/social networks, computer vision, knowledge graphs, and recommender systems.

Research on Early Screening Methods for Children with Autism Spectrum Disorder Based on Personalized Gaze Estimation

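Autism spectrum disorder (ASD) is a neurodevelopmental disorder characterized mainly by social deficits (such as deficits in social communication and interaction) and non-social deficits (such as restricted interests and stereotyped behaviors). It is one of the serious disorders with the fastest-growing number of cases worldwide and one of the main causes of disability among children and adolescents. ASD currently lacks an objective diagnostic basis and can only be determined from symptomatic characteristics, medical history, and social functioning. As a result, the disorder cannot be diagnosed and treated in time, which also hinders deeper study of it. Gaze direction is an authentic and important indicator of a person's attention and inner activity after receiving a visual stimulus, and the behavioral abnormalities of autistic children (abnormal levels of empathizing and systemizing) are closely related to their visual attention patterns. By using gaze estimation to obtain a child's gaze direction while the child views a specified eye-movement paradigm, and then designing a recognition model with artificial intelligence techniques to analyze the gaze information, the child's visual attention pattern can be assessed directly and effectively and the child's empathizing and systemizing levels reflected, enabling early, objective screening for autism in children. At present, research on eye-movement-based autism screening is still at an early stage of theoretical validation: the field is young, its methods are immature, and its results are not yet satisfactory. Existing methods commonly suffer from large gaze-prediction errors and inefficient, rigid diagnostic procedures, which severely restrict the use of these techniques in daily life and clinical practice. This talk presents part of our team's work from two angles: personalized gaze estimation methods for children with ASD, and gaze-based models for assisting ASD diagnosis.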